Introduction

Artificial Intelligence (AI) is fundamentally changing how organizations function, innovate, and deliver value. By intelligently automating tasks, analyzing complex datasets, and enabling faster, data-driven decisions, AI empowers businesses to operate more efficiently and strategically.

As organizations increasingly integrate AI tools like Microsoft Copilot, OpenAI’s ChatGPT, and various third-party generative platforms into their operations, they often underestimate the data security implications involved. These tools interact with large volumes of corporate data to support automation, content generation, and decision-making. Without appropriate controls and visibility, this usage can expose organizations to serious risks related to data privacy and unauthorized access.

According to the Cisco 2025 Data Privacy Benchmark Study, 76% of organizations say they are concerned about data leakage when using generative AI tools like ChatGPT, Microsoft Copilot, or Google Gemini.

Data Security Posture Management (DSPM) for AI is a framework designed to help organizations secure, monitor, and govern data usage in AI applications, especially those involving generative AI like Microsoft Copilot, ChatGPT, and Google Gemini.

It helps organizations oversee security and compliance in generative AI environments, which in turn supports adherence to regulatory standards and enterprise security policies for tools like Microsoft 365 Copilot.

Microsoft Purview’s DSPM for AI provides a centralized platform to:

- Monitor AI usage across your organization with deep visibility into data flows and model interactions.

- Protect sensitive data from exposure to AI prompts, training inputs, or generated outputs.

- Assess data risks such as oversharing, misclassification, or unauthorized access within AI workflows.
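To make the idea of protecting sensitive data in AI prompts more concrete, here is a minimal Python sketch of a pattern-based check that could run on a prompt before it reaches an AI tool. It is an illustration only and does not use or represent Microsoft Purview's API. The pattern set and the `find_sensitive_data` and `redact` helpers are hypothetical, and production classifiers rely on far more than a few regular expressions.

```python
import re

# Hypothetical, deliberately simple patterns for illustration only.
# Real classification combines many signals and is not limited to regexes.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "credit_card": re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b"),
}


def find_sensitive_data(prompt: str) -> dict[str, list[str]]:
    """Return the matches for each pattern that occurs in the prompt."""
    findings: dict[str, list[str]] = {}
    for name, pattern in SENSITIVE_PATTERNS.items():
        matches = pattern.findall(prompt)
        if matches:
            findings[name] = matches
    return findings


def redact(prompt: str) -> str:
    """Replace every match with a placeholder naming the pattern that hit."""
    redacted = prompt
    for name, pattern in SENSITIVE_PATTERNS.items():
        redacted = pattern.sub(f"[{name.upper()} REDACTED]", redacted)
    return redacted
```

A policy layer could call `find_sensitive_data` to decide whether to block, warn, or log, and fall back to `redact` when the prompt is allowed through in sanitized form.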

The business imperative for DSPM for AI

- As organizations increasingly adopt generative AI tools such as Microsoft Copilot, OpenAI’s ChatGPT, and other third-party platforms, they gain remarkable opportunities for productivity and innovation.

- However, this rapid adoption brings increased data security concerns. AI systems often handle sensitive information, including personal data, financial details, intellectual property, and confidential business content, which significantly raises the risk of unintentional exposure or misuse.

- Another growing issue is shadow AI usage, where departments or individuals adopt unapproved AI tools without IT governance. This bypasses security protocols and creates blind spots in data monitoring, increasing the chances of data leaks and unauthorized access.

Without Data Security Posture Management (DSPM) for AI, organizations frequently lack the necessary visibility and control to understand:

- What data is being transmitted to or processed by AI tools

- Whether sensitive information is appropriately secured

- How AI usage aligns with corporate policies and legal standards

This lack of insight can lead to unintended data exposure through AI inputs or outputs. It may also result in:

- The rise of unmanaged or unsanctioned AI applications

- Non-compliance with regulations such as GDPR, HIPAA, and the EU AI Act

- Loss of customer confidence

- Damage to brand reputation

DSPM for AI is a foundational capability for using AI securely and responsibly, enabling businesses to drive innovation while protecting critical data and maintaining regulatory compliance.

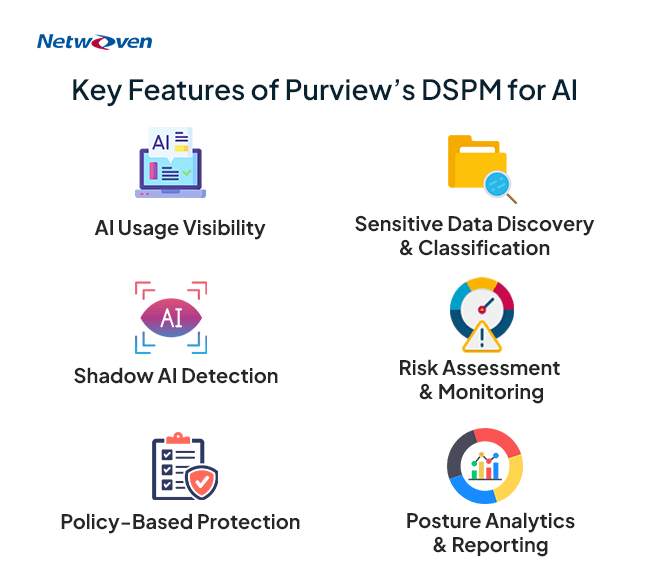

Key Features of Purview’s DSPM for AI

1. AI Usage Visibility

- Get full visibility into how AI tools like Microsoft Copilot and external generative AI services are interacting with your data across the organization.

2. Sensitive Data Discovery and Classification Across AI Workflows

- Automatically discover and classify sensitive data (such as PII, financial records, or intellectual property) used in AI prompts, training data, and outputs, across Microsoft and third-party platforms.

- Apply sensitivity labels (e.g., Confidential, Restricted) for policy enforcement.

3. Shadow AI Detection

- Identify and monitor the use of unapproved or unmanaged AI tools within the organization to minimize data leakage and ensure secure AI adoption.

4. Risk Assessment and Monitoring

- Continuously assess and score data risks associated with AI usage, such as data oversharing, policy violations, or non-compliant access, and receive actionable alerts.

5. Policy-Based Protection

- Apply built-in or tailored data protection policies to control and restrict the improper use or accidental exposure of sensitive information in AI prompts and generated outputs.

6. Posture Analytics and Reporting

- Monitor key indicators like the proportion of AI-related data that’s been classified, the frequency of AI policy breaches, and the decline in data exposure over time. Use these insights to continuously enhance your security posture and showcase the impact of your data protection efforts.
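The indicators named in the posture analytics item come down to simple ratios. The Python sketch below shows one way they might be computed from per-period counts. It is an assumption-laden illustration: the `PeriodStats` fields and the three helper functions are hypothetical names and do not reflect a Purview schema or report format.

```python
from dataclasses import dataclass


@dataclass
class PeriodStats:
    """Hypothetical counts for one reporting period (not a Purview schema)."""
    ai_items_total: int       # data items touched by AI tools
    ai_items_classified: int  # of those, items that carry a classification
    ai_interactions: int      # prompts and responses observed
    policy_breaches: int      # interactions that violated an AI policy
    exposed_items: int        # sensitive items flagged as overshared


def classified_share(stats: PeriodStats) -> float:
    """Proportion of AI-related data that has been classified (0.0 to 1.0)."""
    if stats.ai_items_total == 0:
        return 0.0
    return stats.ai_items_classified / stats.ai_items_total


def breach_rate_per_1000(stats: PeriodStats) -> float:
    """Policy breaches per 1,000 AI interactions."""
    if stats.ai_interactions == 0:
        return 0.0
    return 1000 * stats.policy_breaches / stats.ai_interactions


def exposure_change(previous: PeriodStats, current: PeriodStats) -> float:
    """Relative change in exposed items; a negative value means a decline."""
    if previous.exposed_items == 0:
        return 0.0
    return (current.exposed_items - previous.exposed_items) / previous.exposed_items
```

Comparing these values across consecutive periods gives a rough trend line for classification coverage, breach frequency, and exposure, which is the kind of evidence the reporting feature is meant to surface.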

Conclusion

AI tools like Microsoft Copilot and ChatGPT are helping organizations work smarter and be more innovative. But using these tools also creates new risks, such as leaks of sensitive data, use of unapproved AI tools, and violations of privacy regulations.

Microsoft Purview’s Data Security Posture Management (DSPM) for AI helps solve these problems. It gives organizations a clear view of how AI is using their data, finds and labels sensitive information, spots unapproved AI tools, checks for risks, and enforces security policies.

With Purview’s DSPM for AI, organizations can safely enjoy the benefits of AI while keeping their data secure, following regulations, and protecting their reputation. This careful approach is key to using AI responsibly and maintaining customer trust.