Introduction

In this blog, we will explain the real-time scenario of our customer who wanted to transform the content from JSON files to Azure MySQL. Azure has a great tool called Azure Data Factory which can process millions of JSON files and transform them to any data source.

There are many ways in which we can use Azure Data Factory to transform the data from source to destination locations. However, implementing the most suitable approaches is vital because if we do not use the Azure resources in an optimized manner, we will end up incurring more costs than we had planned.

Here are the Azure resources being used in implementing this process:

- Storage Account Container: This storage account is for creating a blob container to store all JSON files

- Azure MySQL Database: This is the database used to store all the JSON data in the tables.

- Azure Data Factory: This is the main resource that transforms the content from JSON files to Azure MySQL database tables.

Content transformation is the key process which requires more attention while creating it, here are some best practices we should consider:

1. Dedicated Integration Runtimes

2. Minimal Compute size

Here we have the option to select the compute size as per our load and to select the TTL.

3. Transform content using Copy Data activity

Use Copy Data to transfer the data from the source to the target location. However, there are some advantages and disadvantages.

Advantages

- It is faster

- It is a low-cost resource compared to Data Flow

- It is simple to use for transformations

Disadvantages

- It is not suitable for complex data transfers

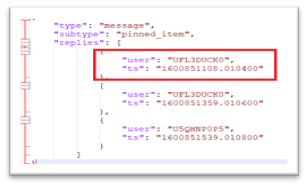

- It just copies the first array element when a JSON file has multiple array elements. For example, in the below screenshot, it just copied red box elements only and the rest were not copied. This is one of the limitations.

4. Transform content using Data Flow activity

If not performed carefully, this can turn out to be a huge challenge, as we face with our customers.

Here are the pipelines we had created

Pipeline – 1: This pipeline performs the following actions.

- It hits the SQL query to MySQL and gets the channel IDs and the channel names where the folders are identified with channel name.

- Executes the ForEach activity looping the channel records, for each channel record it executes another Pipeline-2 passing the channel name to find the respective folder in the Azure BLOB container.

Pipeline – 2: This pipeline performs the following actions

- Taking the folder name and getting the folder metadata with related JSON files in the specific folder

- Execute the foreach activity for all the JSON files in that folder. In the foreach loop, it calls the third Pipeline-3 again.

Pipeline – 3: This pipeline finally calls the Data Flow activity for each JSON file to import the JSON content to the Azure MySQL table in respective mapped columns.

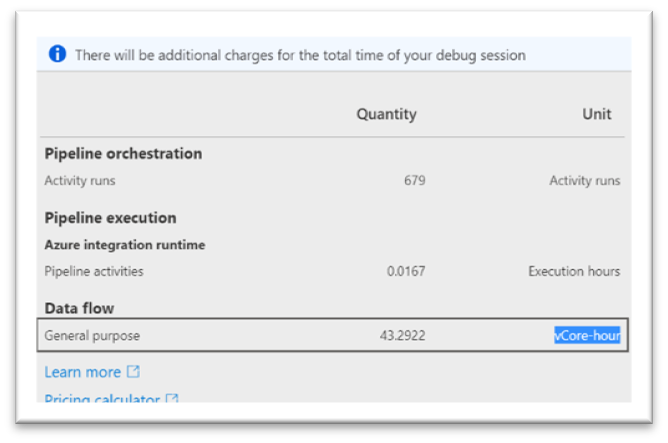

The entire process was affecting the following areas which is immense pressure for us to handle the situation.

- Execution time and performance

- Too much cost for Azure resource using ADF

Our Proposed Solution Implementation Approach

- We have a look-up activity to get channel ID and channel name and then there is ForEach activity which runs the Dataflow based on the number of channel names coming as output from look-up activity.

- The data flow uses a wildcard path to process all the files in a folder (Channel Name here) and transfers all JSON file data to a table in Azure MySQL.

The proposed pipeline is executing 50 Times Faster than the existing one. The table below explains the execution difference between the existing and proposed solutions.

| Existing Solution | New Proposed Solution |

|---|---|

| No of Folders :1 | No of Folders :1 |

| No of Files: 677 | No of Files: 677 |

| No of total rows: 2029 | No of total rows: 2029 |

| Run time: approx. 25 Minutes | Run time: approx. 30 seconds |

|  |

Conclusion

We hope this blog has helped you learn a simple and effective method to transform the content from JSON files to Azure MySQL with the help of Azure Data Factory.

At Netwoven, we leverage our decades of Microsoft technologies experience to work for your organization. Reach out to us to learn more about our services.